Understanding Embeddings and Vector Databases with Qdrant

Vector Embeddings: Semantic Representations of Data

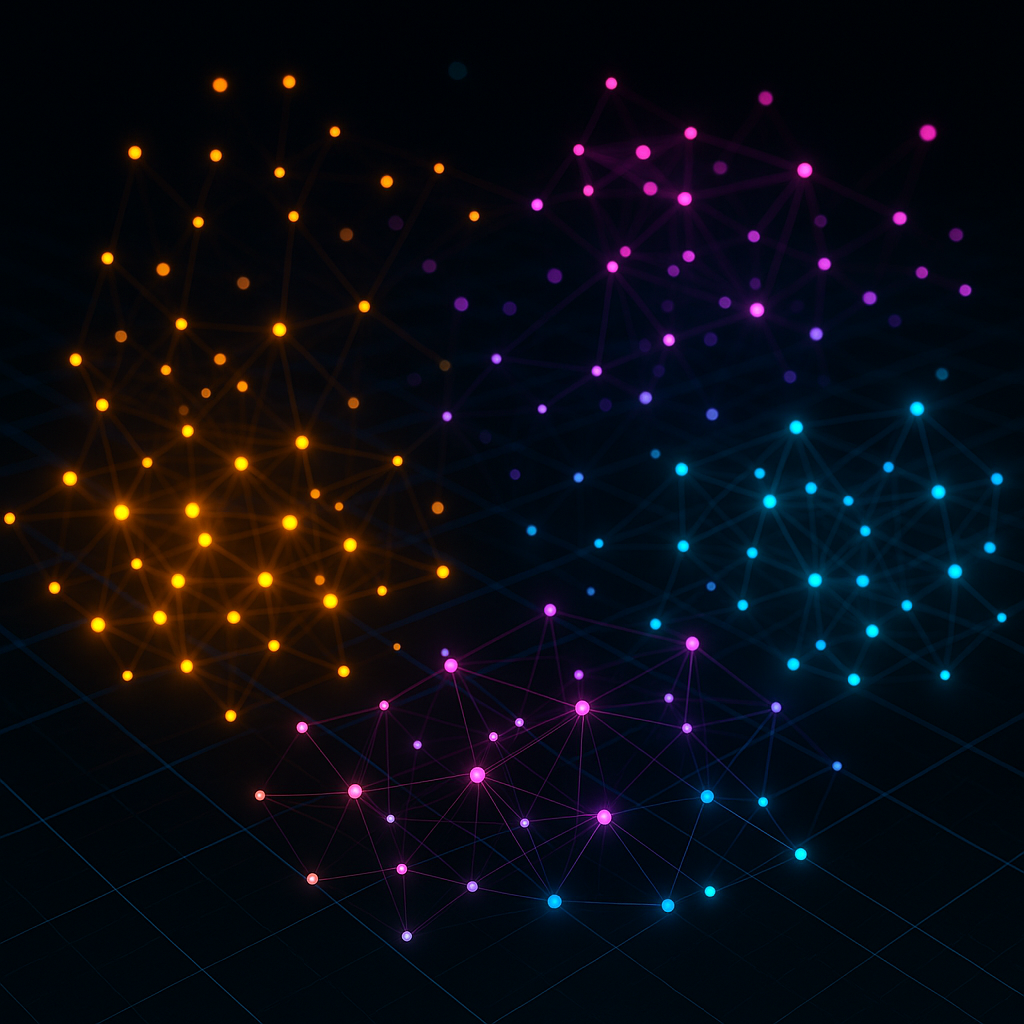

Modern AI systems convert complex data (text, images, audio) into vector embeddings: numeric arrays that capture meaning. Embeddings map high‑dimensional information into a compact vector space where similar items are close together. For example, words like “king” and “queen” map to nearby vectors because they are semantically related. By encoding semantics, embeddings allow machines to compare and search unstructured data efficiently. This underlies many AI applications: recommendation systems, semantic search, chatbots, and more all rely on embeddings to understand user intent beyond simple keywords.

Why Embeddings Matter

- Semantic clustering – Related items naturally form clusters in embedding space. When both your query and your documents are embedded, the k nearest neighbors are usually the most relevant, even if they share no common keywords.

- Low‑dimensional encoding – Embeddings compress rich input (an essay, an image) into a modest vector (384–1536 floats), making storage and comparison tractable.

- Real‑world applications – Recommendation engines, semantic search, and Retrieval‑Augmented Generation (RAG) chatbots all rely on fast nearest‑neighbor search over embeddings.

A new class of data stores, vector databases, is built specifically to index these vectors. Qdrant, written in Rust, is one of the most popular open‑source options.

What Is a Vector?

A vector is just an ordered list of numbers, e.g. v = [0.12, ‑1.7, 3.4, 0.02]. Think of it as the coordinates of a point in space:

| Dimensions | Example | Intuition |
|---|---|---|
| 2‑D | [x, y] | Point on graph paper |
| 3‑D | [x, y, z] | Point in 3‑D space |
| 768‑D | Sentence embedding | Same idea, just more axes |

A Tiny 2‑D Example You Can Draw

Assume a toy model that discovered only two latent axes:

- Animal ↔ Fruit

- Domestic ↔ Wild / Citrus

| Word | 2‑D Vector | Interpretation |
|---|---|---|
| cat | [0.90, 0.10] | Very animal, slightly domestic |
| dog | [0.85, 0.05] | Almost identical to cat |
| apple | [0.10, 0.90] | Strongly fruit, non‑citrus |
| orange | [0.05, 0.95] | Fruit & citrus |

Plotted in 2‑D, cat and dog cluster on one side, apple and orange on the other. Cosine similarity quickly tells us “dog” is nearest to “cat.”
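To make that concrete, here is a small sketch in plain Python that checks the claim on the toy vectors from the table. The helper names `cosine_similarity` and `nearest` are made up for this example; nothing here depends on a particular library.

```python
import math

# Toy 2-D vectors from the table above.
VECTORS = {
    "cat": [0.90, 0.10],
    "dog": [0.85, 0.05],
    "apple": [0.10, 0.90],
    "orange": [0.05, 0.95],
}


def cosine_similarity(a, b):
    """Cosine of the angle between a and b: dot(a, b) / (|a| * |b|)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def nearest(word):
    """Return the other word whose vector is most similar to `word`."""
    others = (w for w in VECTORS if w != word)
    return max(others, key=lambda w: cosine_similarity(VECTORS[word], VECTORS[w]))


print(nearest("cat"))     # dog
print(nearest("orange"))  # apple
```

The cosine similarity between cat and dog comes out at roughly 0.999, while cat and apple score only about 0.22, which is why the two clusters separate so cleanly.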

Scaling Up

Real models output hundreds or thousands of dimensions:

| Model / API | Dimensions |
|---|---|
| OpenAI text‑embedding‑3‑small | 1536 |
| Sentence‑Transformers all‑MiniLM‑L6‑v2 | 384 |
| CLIP image embeddings | 512 |

The geometry is the same—you just can’t draw 1536‑D space. That’s where a vector database helps.
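For a feel of what those dimension counts mean in practice, here is a back‑of‑the‑envelope estimate of raw vector storage. It assumes each component is a 4‑byte float32 and ignores index and payload overhead, so treat the numbers as a lower bound; `raw_size_mb` is a hypothetical helper.

```python
BYTES_PER_FLOAT32 = 4  # assumption: components are stored as 32-bit floats


def raw_size_mb(num_vectors, dimensions):
    """Size of the vectors alone, in megabytes (10**6 bytes)."""
    return num_vectors * dimensions * BYTES_PER_FLOAT32 / 1_000_000


models = [
    ("text-embedding-3-small", 1536),
    ("all-MiniLM-L6-v2", 384),
    ("CLIP", 512),
]
for name, dims in models:
    print(f"{name}: {raw_size_mb(1_000_000, dims):,.0f} MB per million vectors")
```

Under these assumptions, a million 1536‑D vectors take about 6 GB before any index is built, four times as much as the same number of 384‑D vectors.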

Key takeaway: In embedding space, distance ≈ semantic difference and closeness ≈ semantic similarity.

Generating Embeddings

Embeddings come from models such as OpenAI’s Ada family or open‑source sentence‑transformers. Example (Python):

    from openai import OpenAI
    from qdrant_client import QdrantClient
    from qdrant_client.models import Distance, PointStruct, VectorParams

    # OpenAI Python SDK v1+ client; the older module-level openai.Embedding.create
    # call is not available in v1+ of the SDK.
    openai_client = OpenAI(api_key="YOUR_OPENAI_API_KEY")
    client = QdrantClient(host="localhost", port=6333)

    texts = [
        "Qdrant is the best vector search engine!",
        "Semantic search lets you query by meaning",
    ]

    # 1. Generate embeddings (1536-D vectors)
    resp = openai_client.embeddings.create(input=texts, model="text-embedding-3-small")

    # 2. Create the collection. recreate_collection drops any existing collection
    #    with this name; newer qdrant-client releases mark it as deprecated.
    client.recreate_collection(
        collection_name="example_collection",
        vectors_config=VectorParams(size=1536, distance=Distance.COSINE),
    )

    # 3. Upsert embeddings, keeping the source text as payload
    points = [
        PointStruct(id=i, vector=d.embedding, payload={"text": texts[i]})
        for i, d in enumerate(resp.data)
    ]
    client.upsert(collection_name="example_collection", points=points)

Vector Databases and Qdrant

A vector database is a specialized storage system for embeddings. Unlike a typical SQL/NoSQL database, a vector DB is optimized for nearest‑neighbor search in high‑dimensional space. It provides extremely fast similarity search at scale—returning the closest vectors to a query point. In effect, vector databases function more like search engines than traditional data stores: they are built to handle high‑throughput, low‑latency vector comparisons even under heavy loads.

Key features of vector databases (and Qdrant in particular) include:

- Scalability and performance – Qdrant (written in Rust) is designed to handle millions of vectors with low latency. Its HNSW‑based vector index lets it return approximate nearest neighbors in milliseconds instead of scanning every vector.

- Flexible queries – You can filter vectors by metadata or payload, do hybrid searches (keywords + vectors), and adjust search accuracy vs. speed.

- Cloud and local deployment – Qdrant can run on your machine (via Docker) or as a managed cloud service. It exposes gRPC/REST APIs and has client libraries in Rust, Python, Go, etc., making integration straightforward.
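The idea behind payload‑filtered search can be sketched without a running server. The toy store below is plain Python, not Qdrant's API: the `points` list, the `lang` payload field, and `filtered_search` are all invented for illustration, and a real vector database would use an ANN index rather than this linear scan.

```python
import heapq
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


# Hypothetical points: an id, a vector, and a metadata payload.
points = [
    {"id": 1, "vector": [0.9, 0.1], "payload": {"lang": "en", "text": "cats"}},
    {"id": 2, "vector": [0.8, 0.2], "payload": {"lang": "de", "text": "Hunde"}},
    {"id": 3, "vector": [0.1, 0.9], "payload": {"lang": "en", "text": "apples"}},
]


def filtered_search(query_vector, must_match, limit):
    """Keep points whose payload matches every key in must_match, then rank by cosine."""
    candidates = [
        p for p in points
        if all(p["payload"].get(k) == v for k, v in must_match.items())
    ]
    return heapq.nlargest(limit, candidates, key=lambda p: cosine(query_vector, p["vector"]))


hits = filtered_search([1.0, 0.0], must_match={"lang": "en"}, limit=2)
print([h["id"] for h in hits])  # [1, 3]
```

Point 2 is close to the query but is excluded because its payload does not match the filter, which is the behaviour you want when, say, restricting results to one language or one tenant.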

For example, once vectors are stored in Qdrant, a semantic search query might look like:

Query Example

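    from openai import OpenAI

    openai_client = OpenAI(api_key="YOUR_OPENAI_API_KEY")  # OpenAI Python SDK v1+

    query = "best way to scale vector search"

    # Embed the query with the same model used for the documents
    q_vec = openai_client.embeddings.create(
        input=[query], model="text-embedding-3-small"
    ).data[0].embedding

    # client is the QdrantClient connected to the instance holding example_collection
    hits = client.search(
        collection_name="example_collection",
        query_vector=q_vec,
        limit=3,
    )

    for h in hits:
        print(h.payload["text"], "(score:", h.score, ")")

Case Study: The vector‑chat Projects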

Both vector‑chat (Python) and vector‑chat‑rs (Rust) demonstrate a complete Retrieval‑Augmented Generation (RAG) pipeline on top of Qdrant. Each project follows the same three‑stage architecture but implements it with language‑specific tooling.

1. Ingestion → Embedding → Storage

| Stage | Python version | Rust version |
|---|---|---|
| Chunking | embed_chunks_openai.py uses tiktoken to split documents into ~500‑token chunks, adding overlap to preserve context. | src/embed.rs relies on the tiktoken-rs crate to mirror OpenAI’s token counting. |
| Embedding | Calls openai.Embedding.create in batches; returns 1536‑D Ada vectors. | Uses the async-openai crate; embedding requests are executed concurrently with tokio::join! for throughput. |
| Upsert to Qdrant | Uses qdrant-client Python SDK: client.upsert(collection, points) where each PointStruct stores the embedding and the raw chunk as payload["text"]. | Employs the qdrant-client Rust crate with the same gRPC interface; vectors are inserted via client.upsert_points. |
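The chunking stage can be sketched as a sliding window over tokens. This is not the projects' actual code: it splits on whitespace instead of calling tiktoken, and the 50‑token overlap is an assumed value, since the table only says that chunks are about 500 tokens with some overlap.

```python
def chunk_tokens(tokens, chunk_size=500, overlap=50):
    """Split a token list into windows of chunk_size that share `overlap` tokens."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be non-negative and smaller than chunk_size")
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(tokens), step):
        chunks.append(tokens[start:start + chunk_size])
        if start + chunk_size >= len(tokens):
            break  # this window already reaches the end of the document
    return chunks


# Whitespace "tokens" stand in for real tokenizer output.
words = ("lorem ipsum " * 600).split()  # 1200 tokens
chunks = chunk_tokens(words)
print([len(c) for c in chunks])  # [500, 500, 300]
```

Each chunk repeats the last 50 tokens of the previous one, so a sentence that straddles a boundary still appears whole in at least one chunk.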

Tip: The projects use cosine distance, but Qdrant also supports Euclidean and dot‑product distance for different notions of similarity.
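How the three metrics differ is easy to see on a tiny example. The vectors below are made up; the only point is that dot product and Euclidean distance are sensitive to vector length, while cosine looks at direction alone.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def euclidean(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def cosine(a, b):
    return dot(a, b) / (math.sqrt(dot(a, a)) * math.sqrt(dot(b, b)))


query = [1.0, 0.0]
same_direction_long = [3.0, 0.0]  # same direction as the query, three times as long
slightly_off_short = [0.9, 0.1]   # slightly different direction, similar length

for name, v in [("long", same_direction_long), ("short", slightly_off_short)]:
    print(name, round(cosine(query, v), 3), round(dot(query, v), 3), round(euclidean(query, v), 3))
# long 1.0 3.0 2.0
# short 0.994 0.9 0.141
```

Cosine and dot product rank the long vector first, whereas Euclidean distance prefers the short one. When all vectors are normalised to unit length, the three metrics produce the same ordering.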

2. Retrieval at Query Time

- User asks a question.

- The question is embedded with the same model.

- Qdrant returns the k nearest chunks (limit=5 in Python, configurable flag in Rust).

- The text of those chunks is concatenated into a context window.

Python snippet (simplified):

    results = client.search("docs", query_vector=q_vec, limit=5)
    context = "\n".join(hit.payload["text"] for hit in results)

Rust equivalent:

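    // Simplified; assumes a recent qdrant-client crate, where searches are
    // built with SearchPointsBuilder and payloads must be requested explicitly.
    let hits = client
        .search_points(SearchPointsBuilder::new("docs", q_vec, 5).with_payload(true))
        .await?;
    let context = hits
        .result
        .iter()
        .filter_map(|h| h.payload.get("text").and_then(|v| v.as_str()))
        .map(|s| s.to_string())
        .collect::<Vec<_>>()
        .join("\n");

3. Generation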

The retrieved context is added to the prompt alongside the system message and sent to OpenAI’s Chat Completions endpoint:

    SYSTEM: You are a helpful assistant.
    CONTEXT: <top‑5 chunks from Qdrant>
    USER: <original question>

The LLM therefore grounds its answer in retrieved knowledge, reducing hallucinations.
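A minimal sketch of how such a prompt could be assembled, assuming the chat‑style message format (a list of role/content dictionaries). `build_messages` is a hypothetical helper, and the exact wording and layout used by the vector‑chat projects may differ.

```python
def build_messages(chunks, question):
    """Put the retrieved chunks into the system message, ahead of the user's question."""
    context = "\n\n".join(chunks)
    system = "You are a helpful assistant.\n\nCONTEXT:\n" + context
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": question},
    ]


messages = build_messages(
    ["Qdrant is a vector database.", "It is written in Rust."],
    "What language is Qdrant written in?",
)
for m in messages:
    print(m["role"].upper(), "->", m["content"])
```

Keeping the context in the system message leaves the user turn untouched, which makes it easy to log the original question separately from the retrieved text.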

Implementation Highlights

Python

- CLI – rich and argparse provide a colourful interface: python chat_openai.py --collection docs.

- Streaming output – Uses the SDK’s stream=True to print tokens in real time.

- Maturity – Easy to extend with LangChain or FastAPI for a web service.

Rust

- Performance – A single static binary (<10 MB) with zero Python dependencies.

- Async everywhere – tokio runtime plus async gRPC yields high throughput on commodity hardware.

- Type‑safety – Compile‑time checks prevent many runtime errors common in scripting.

Why Two Languages?

| Aspect | Python | Rust |
|---|---|---|
| Prototyping speed | Rapid; great for notebooks. | Slower initial setup. |
| Runtime speed & memory | Good enough for small loads. | Superior for large‑scale services. |
| Deployment | Requires Python environment. | Ships as a single binary. |

Running both side‑by‑side shows the language‑agnostic nature of RAG: the heavy lifting (embeddings + Qdrant ANN) is the same—only the glue code differs.

Conclusion

Vector embeddings convert raw content into math where distance encodes meaning. A vector database like Qdrant lets you store millions of those vectors and retrieve the nearest neighbors in milliseconds. The two vector‑chat repositories—one in Python, one in Rust—demonstrate how easy it is to bolt together an embedding model and Qdrant to build production‑ready semantic search and chat experiences.

Happy vectorizing!